Analog compute is the key to powerful, yet power-efficient, AI processing

By Tim Vehling, senior vice-president of product and business development at Mythic Inc.

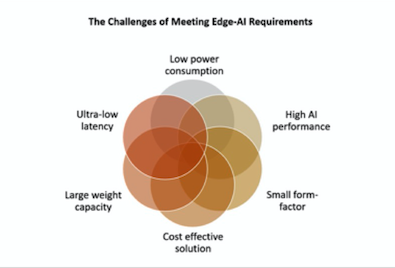

As artificial intelligence (AI) applications become more popular in a growing number of industries, the need for more throughput, more storage capacity and lower power consumption is becoming increasingly important. At the same time, machine learning models are growing at an exponential rate. With these models, traditional digital processors struggle to deliver the necessary performance with low enough power consumption and adequate memory resources, especially for large models running at the edge. This is where analog computing comes in, enabling companies to get more performance at lower power consumption in a small form factor that’s also cost-efficient.

At the lowest level, the power advantages of analog compute come from performing massively parallel vector-matrix multiplications with parameters stored inside flash memory arrays. Analog compute with flash storage is also referred to as analog compute in-memory. Tiny electrical currents are steered through a flash memory array that stores reprogrammable neural network weights, and the result is captured through analog-to-digital converters (ADCs). When analog compute handles the vast majority of the inference operations, the analog-to-digital and digital-to-analog energy overhead can be kept to a small portion of the overall power budget, and a large drop in compute power can be achieved. There are also many second-order, system-level effects that deliver a large drop in power. For example, when the amount of data movement on the chip is multiple orders of magnitude lower, the system clock speed can be kept up to 10x lower than in competing systems, and the design of the control processor is much simpler.
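To make the data flow described above concrete, here is a minimal Python sketch of an idealized analog in-memory vector-matrix multiply. It is an illustration only, not Mythic's implementation: the function names, the 8-bit converter resolutions, the signed weights and the choice of ADC full-scale range are all assumptions, and real arrays also have noise and device variation that this model ignores.

```python
def quantize(x, bits, full_scale):
    """Clip x to [-full_scale, full_scale] and round it to a uniform signed grid."""
    levels = 2 ** (bits - 1) - 1
    x = max(-full_scale, min(full_scale, x))
    return round(x / full_scale * levels) / levels * full_scale


def exact_vmm(weights, inputs):
    """Reference digital result: one dot product per output column."""
    n_out = len(weights[0])
    return [sum(x * row[j] for x, row in zip(inputs, weights)) for j in range(n_out)]


def analog_vmm(weights, inputs, dac_bits=8, adc_bits=8):
    """Idealized analog in-memory multiply (hypothetical model).

    weights: one row per input line, one value per output column,
             standing in for the values stored in the flash cells.
    inputs:  activations in [-1, 1].
    """
    n_out = len(weights[0])
    # Digital-to-analog step: inputs become quantized drive levels.
    drives = [quantize(x, dac_bits, 1.0) for x in inputs]
    # Each cell contributes drive * weight; contributions on a column add up,
    # so every column computes its dot product at the same time.
    column_sums = exact_vmm(weights, drives)
    # Assumed ADC range: the largest sum any column could produce.
    full_scale = max(sum(abs(row[j]) for row in weights) for j in range(n_out)) or 1.0
    # Analog-to-digital step: each column sum is read out once.
    return [quantize(s, adc_bits, full_scale) for s in column_sums]


weights = [[0.5, -0.25], [0.25, 0.5]]
inputs = [1.0, -1.0]
print(analog_vmm(weights, inputs))
print(exact_vmm(weights, inputs))
```

In this model the weights never move: only the input vector and one converted value per output column cross the array boundary, which is the property the paragraph above credits for the reduction in on-chip data movement.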

Math operations for high performance AI

Today’s digital compute approaches focus on optimizing for peak benchmarks and have only delivered incremental advances to chip architectures. Analog compute offers a much better alternative for high-end edge AI requirements. Analog compute solves the issue of the memory bottleneck, while also offering major advantages in system-level power, performance, and cost. From the perspective of performing all of the math operations for high performance AI, analog compute systems have the best power efficiency both in the short run and long run.

Using analog compute processors for edge-AI applications is a great option for many different use cases. For example, drones equipped with high definition cameras for computer vision (CV) applications can require running complex AI networks locally to provide immediate and relevant information to the control station. Processors that use analog compute make it possible to deliver powerful AI processing that’s also extremely power-efficient so companies can deploy these networks on the drone for a wide range of CV applications. These applications include monitoring agricultural yields, inspecting critical infrastructure such as power lines, cell phone towers, bridges and wind farms, inspecting fire damage and examining coastline erosion.

AI-powered collaborative robots

Manufacturing is another industry that is ripe for AI transformation. Most factories today are largely automated and many have deployed computer vision (CV) to optimize production lines, which has significantly increased efficiency and driven down costs. Today we’re seeing factories become even more automated with interactive human-to-machine processes. AI-powered collaborative robots are working alongside humans, making factories much safer and even more productive. Industrial robots are also using CV to inspect products on the production line in real time, such as checking cereal boxes before they are shipped off to grocery stores.

The enterprise is another sector that can benefit from analog compute. In many applications, edge data is moving to the cloud, where data centers run neural networks at scale across all their data streams. Companies can use AI processors to support a broad range of neural networks and deploy them into existing infrastructure, helping to cut the cost of ownership. Because neural network inference is compute-intensive and power-hungry, it has traditionally required costly hardware, advanced cooling infrastructure, and kilowatts of power. Processors based on analog compute in-memory are extremely power-efficient and scalable, so they can easily fit into companies’ existing infrastructure.

Next level of safety and privacy

Security cameras and surveillance solutions are another application well suited to AI and analog compute. In current systems, cameras capture images of people and objects and send that information to a command center for visual analysis, which is the source of the unease many people feel about being constantly monitored. A better alternative is to have cameras that use trained AI algorithms to detect specific sequences (accidents, crimes, or other events) and send only the footage of potential security incidents for analysis. This approach delivers the safety and security the public desires while providing the privacy all of us expect. Cameras today are already equipped with computer vision techniques. To reach the next level of safety and privacy, high-performance edge-AI capabilities in the camera will be required for traffic monitoring, incident detection, facial recognition, and many more applications to come.

Analog compute is the ideal approach for AI processing because of its ability to operate using much less power at a higher speed with faster frame-rates. The extreme power efficiency of analog compute technology will let product designers unlock incredibly powerful new features in small edge devices, and will help reduce costs and a significant amount of wasted energy in enterprise AI applications.

---

Tim Vehling, senior vice-president of product and business development at Mythic Inc.

About Mythic

Founded in 2012, Mythic has developed a unified hardware and software platform featuring its unique Mythic Analog Compute Engine (Mythic ACE) to deliver enhanced power, cost, and performance that shatters digital barriers preventing AI innovation at the edge. The Mythic Analog Matrix Processor (Mythic AMP) makes it much easier to deploy powerful AI solutions, from the data center to the edge device. The firm has offices in Redwood City, CA and Austin, TX.