Bringing touchless control to the industrial IoT

By Alex Dopplinger, director product marketing – building and energy, NXP Semiconductors

Pandemic has shifted demand for alternative interfaces to reduce the need for physical contact

Many people use human-machine interfaces (HMIs) with sleek glass touch screens and ubiquitous buttons daily, in homes, vehicles, workplaces, and public venues. The increasing spread and severity of the COVID-19 virus has heightened concern about touching the same buttons or screens as many other people. The pandemic has suddenly shifted demand toward alternative interfaces that reduce the need for physical contact.

It is not yet entirely understood how the virus spreads. However, a recent study suggests that SARS-CoV-2 may remain viable on surfaces such as glass, plastic, and steel for up to two or three days. This makes it even more important to implement touchless alternatives for humans to interact with machines in the workplace, retail, and hospital settings.

Introducing touchless controls

The Industrial Internet of Things (IIoT) automates manufacturing and smart machine communications, but there are still times when humans must interact with machines. To reduce germ and virus transmission, we need touchless alternatives to the traditional push-button or touchscreen controls.

Many users are familiar with voice assistant applications at home or in vehicles. However, this type of voice control is unreliable in noisy manufacturing facilities, in active outdoor environments, or among groups of people who are talking. For these cases, speech and gesture can be combined to give a more adaptable and robust multi-modal touchless interface.
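One way to picture a multi-modal interface is as a small fusion rule that sits after the speech and gesture recognizers. The sketch below is illustrative only: the `Observation` type, the `fuse` function, and all thresholds are hypothetical names and values chosen for this example, not part of any NXP toolkit. It accepts a command when both modalities agree within a short time window, rejects conflicting inputs, and lets a single modality through only when its confidence is very high.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Observation:
    """One recognizer output (hypothetical structure for this sketch)."""
    command: str
    confidence: float   # 0.0 to 1.0, as reported by the recognizer
    timestamp_s: float  # when the input was observed


def fuse(speech: Optional[Observation],
         gesture: Optional[Observation],
         max_gap_s: float = 1.5,
         joint_threshold: float = 0.6,
         solo_threshold: float = 0.95) -> Optional[str]:
    """Return a command to execute, or None if the inputs are not convincing."""
    if speech is not None and gesture is not None:
        close_in_time = abs(speech.timestamp_s - gesture.timestamp_s) <= max_gap_s
        if close_in_time:
            if speech.command != gesture.command:
                return None  # conflicting inputs: safest to do nothing
            if min(speech.confidence, gesture.confidence) >= joint_threshold:
                return speech.command  # both modalities agree
    # Fall back to a single modality only when exactly one is very confident.
    strong = [o for o in (speech, gesture)
              if o is not None and o.confidence >= solo_threshold]
    if len(strong) == 1:
        return strong[0].command
    return None
```

With these assumed thresholds, a moderately confident spoken "start" plus a matching gesture half a second later is accepted, while the same spoken command alone is ignored. In a noisy plant, the thresholds would need tuning against recorded data.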

With voice or vision-controlled systems, machines must quickly and reliably differentiate between deliberate user instructions and random or unintended inputs. For example, a machine should only turn on when the user intends this response, and not simply because a person is standing near it and talking. Machine vision systems can recognize gestures such as a hand movement, nod of a head, wave of a foot, and finger-pointing. Interpreting body language can become a more natural way for machines to respond to visible inputs from human operators.
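A common way to separate deliberate gestures from incidental movement is to require that the same gesture be detected consistently over several consecutive frames. The following is a minimal sketch of that idea, assuming an upstream model that emits a label and a confidence per frame. The class name `GestureDebouncer` and its default parameters are hypothetical.

```python
from collections import deque
from typing import Deque, Optional


class GestureDebouncer:
    """Confirm a gesture only after it dominates a window of recent frames."""

    def __init__(self, window: int = 10, min_hits: int = 8,
                 min_confidence: float = 0.8) -> None:
        self.window = window
        self.min_hits = min_hits
        self.min_confidence = min_confidence
        self._recent: Deque[Optional[str]] = deque(maxlen=window)

    def update(self, label: Optional[str], confidence: float) -> Optional[str]:
        """Feed one per-frame prediction; return a label once it is confirmed."""
        # Missing or low-confidence detections are stored as "no gesture".
        accepted = label if label and confidence >= self.min_confidence else None
        self._recent.append(accepted)
        if accepted is None or len(self._recent) < self.window:
            return None
        hits = sum(1 for seen in self._recent if seen == accepted)
        if hits >= self.min_hits:
            self._recent.clear()  # do not re-trigger on the same held gesture
            return accepted
        return None
```

Allowing `min_hits` to be smaller than `window` tolerates a few dropped frames, for example when a hand is briefly occluded, while a person merely walking past is unlikely to produce the same label in most frames of the window.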

Gesture-based solution development

The first step in developing a gesture-based solution is to identify which gesture types the system must recognize and interpret. For example, will the user communicate using hands only, or by a full-body movement? Will finger movements be easier for the vision system to capture than a whole body, which could be partially obscured by clothing or other items being carried?

Gesture complexities are also important design parameters. For instance, opening a door might need only a single hand wave, but adjusting environmental controls or changing a production line might require a range of intricate gestures.

Finally, the speed of the movement and environmental conditions can play a significant role – for example, when lighting levels are low or too bright. Understanding all these factors helps determine the number and type of camera sensors required, the field of view, the focal length, and the resolution required to detect and interpret the gesture.
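The relationship between field of view, focal length, resolution, and movement speed can be estimated with simple pinhole-camera geometry before any hardware is chosen. The sketch below is a rough sizing aid under that idealized model (no lens distortion). The function names and the example numbers are assumptions for illustration, not recommendations.

```python
import math


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def scene_width_m(fov_deg: float, distance_m: float) -> float:
    """Width of the scene covered by the camera at a given distance."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def pixels_on_target(target_width_m: float, distance_m: float,
                     sensor_width_mm: float, focal_length_mm: float,
                     image_width_px: int) -> float:
    """Approximate number of horizontal pixels covering the target (e.g. a hand)."""
    fov = horizontal_fov_deg(sensor_width_mm, focal_length_mm)
    return image_width_px * target_width_m / scene_width_m(fov, distance_m)


def motion_blur_px(speed_m_s: float, exposure_s: float, distance_m: float,
                   sensor_width_mm: float, focal_length_mm: float,
                   image_width_px: int) -> float:
    """Approximate blur, in pixels, for sideways motion during one exposure."""
    travelled_m = speed_m_s * exposure_s
    return pixels_on_target(travelled_m, distance_m, sensor_width_mm,
                            focal_length_mm, image_width_px)


# Assumed example: 4.8 mm wide sensor, 4 mm lens, 1280-pixel-wide image,
# a 10 cm wide hand at 2 m, moving at 1 m/s with a 10 ms exposure.
fov = horizontal_fov_deg(4.8, 4.0)                       # roughly 62 degrees
hand_px = pixels_on_target(0.10, 2.0, 4.8, 4.0, 1280)    # roughly 53 pixels
blur_px = motion_blur_px(1.0, 0.010, 2.0, 4.8, 4.0, 1280)  # roughly 5 pixels
```

If the hand covers too few pixels for the intended gestures, the options are a longer focal length (narrower field of view), a higher-resolution sensor, or a shorter working distance. Low light lengthens the exposure, which increases the blur figure.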

It is also advisable to offer a back-up interface, such as voice control or a physical touch screen, in case the user cannot use the gesture method. For safety-critical functions in industrial environments, the application software may need a functional safety assessment and certification to a standard such as IEC 61508.

Turning concept into reality

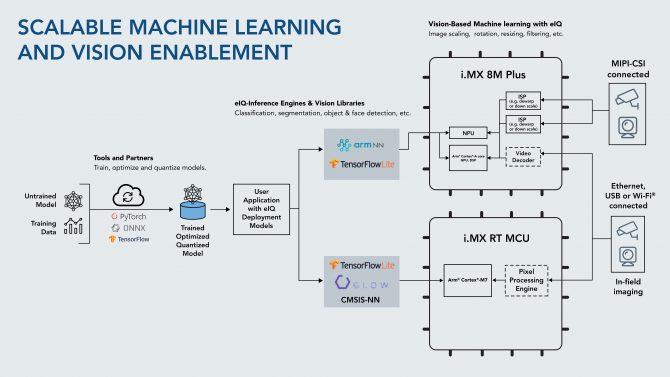

After the gesture, environment, and camera types are understood, we must acquire or build a gesture-recognition machine learning model. The left side of Figure 1 shows the steps needed to convert gesture examples into an inference engine – the algorithm which actually recognizes the gesture. TensorFlow, ONNX, and PyTorch are some commonly used tools for this purpose.
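The split between training on gesture examples and running an inference engine can be illustrated with a deliberately tiny stand-in. The sketch below is not the TensorFlow, ONNX, or PyTorch workflow from Figure 1. It swaps in a nearest-centroid classifier in plain Python, and it assumes each gesture has already been reduced to a short feature vector. The function names and sample data are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Features = Sequence[float]
Model = Dict[str, List[float]]


def train_centroids(examples: List[Tuple[str, Features]]) -> Model:
    """'Training': average the feature vectors of each labelled gesture."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for label, features in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total]
            for label, total in sums.items()}


def infer(model: Model, features: Features) -> Tuple[str, float]:
    """'Inference': return the closest gesture label and its distance."""
    return min(
        ((label, math.dist(centroid, features)) for label, centroid in model.items()),
        key=lambda pair: pair[1],
    )


# Made-up two-dimensional features for two gestures.
examples = [
    ("wave", [1.0, 0.0]), ("wave", [0.8, 0.2]),
    ("point", [0.0, 1.0]), ("point", [0.2, 0.8]),
]
model = train_centroids(examples)      # done once, typically off-device
label, distance = infer(model, [0.7, 0.1])  # done per frame on the device
```

The point of the sketch is the division of labor: the model is produced once from collected examples, then only the lightweight `infer` step needs to run on the embedded target. A production system would replace both functions with a trained neural network and its runtime.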

Only now can we identify the appropriate hardware and software. Gesture recognition systems are typically built on industrial-grade embedded platforms ranging from a single smart camera connected to a general-purpose computing core, to multiple camera sensors feeding multicore processors with highly optimized vision and machine learning accelerators. Figure 1 shows two such options for a gesture-recognition system, recommending an i.MX RT microcontroller platform for simpler systems, and the NXP i.MX 8M Plus applications processor for more complex or faster-responding gesture and vision systems.

Stereo vision cameras can use MIPI-CSI, USB, or Ethernet connections, together with audio inputs to recognize speech, and sound generators to provide audible user feedback. A display panel can also give visual instructions and feedback to the user and may incorporate a back-up touchscreen in case the contactless control fails or cannot be used.

The fastest way to get started is to leverage an existing embedded platform and toolkit. For example, the Toradex Apalis i.MX8 Embedded Vision Starter Kit is an industrial-grade single-board solution based on an NXP i.MX 8 applications processor, combined with an Allied Vision sensor, which leverages Amazon Web Services (AWS) development tools for the object recognition task. This kit can collect gesture examples and transfer them to the AWS tools to train the gesture-recognition models. The resulting inference engine can then be loaded back onto the same kit to recognize the gestures and inform the machine how to respond.

Conclusion

Machine vision systems are set to surge in popularity as the demand for contactless user interfaces rises. This need exists across a wide range of applications, including retail, smart building, healthcare, industrial, and entertainment.

Touchless controls will not only keep users safe but also improve the way humans interact with machines in industrial and manufacturing environments. Existing hardware and software sub-modules can be leveraged to build cost-effective gesture-based controls that are responsive and reliable, enabling a new era of touchless user interfaces that will help industry to continue in the new normal.

————————————-

Alexandra Dopplinger, P.Eng., is director product marketing, Building and Energy, for NXP’s Edge Processing Business Line. https://www.nxp.com/applications/solutions/enabling-technologies/edgeverse:EDGE-COMPUTING