Deeplite accelerates AI on Arm CPUs using ultra-compact quantization

EP&T Magazine

DeepliteRT allows customers to utilize existing Arm CPUs for computer vision at the edge

Deeplite, a Montreal-based provider of AI optimization software, has added Deeplite Runtime (DeepliteRT) to its platform.

DeepliteRT is designed to make AI model inference faster, more compact and more energy-efficient in production deployment, without compromising accuracy. Users stand to benefit from lower power consumption, reduced costs and the ability to run AI models on existing Arm CPUs.

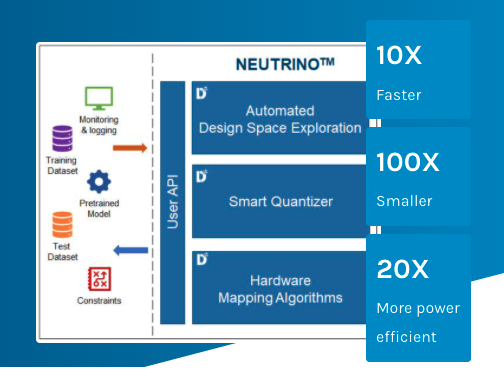

“Multiple industries continue to look for new ways to do more on the edge. It is where users interact with devices and applications and businesses connect with customers. However, the resource limitations of edge devices are holding them back,” said Nick Romano, CEO and co-founder at Deeplite. “DeepliteRT helps enterprises to roll out AI quickly on existing edge hardware, which can save time and reduce costs by avoiding the need to replace current edge devices with more expensive hardware.”

Builds upon existing inference optimization solutions

Deeplite has partnered with Arm to run DeepliteRT on Arm's Cortex-A series CPUs, which are found in everyday devices such as home security cameras. Businesses can run complex AI tasks on these low-power CPUs, eliminating the need for the expensive, power-hungry GPU-based hardware that limits AI adoption.

DeepliteRT builds upon the company's existing inference optimization solutions, including Deeplite Neutrino, an intelligent optimization engine for Deep Neural Networks (DNNs) on edge devices, where size, speed and power are often major challenges. Neutrino automatically optimizes DNN models for target resource constraints. It takes as input large DNN models that have already been trained for a specific use case, together with the constraints of the target edge device, and delivers smaller, more efficient models that remain accurate.

“To make AI more accessible and human-centered, it needs to function closer to where people live and work and do so across a wide array of hardware without compromising performance. Looking beyond mere performance, organizations are also seeking to bring AI to new areas that previously could not be reached, and much of this is in edge devices,” said Bradley Shimmin, Chief Analyst for AI Platforms, Analytics, and Data Management at Omdia. “By making AI smaller and faster, Deeplite is helping to bring AI to new edge applications and new products, services and places where it can benefit more people and organizations.”