Autonomous vehicles drive AI chip innovation

By Bin Lei, co-founder & senior vice-president at Gyrfalcon Technology Inc.


The technology in our cars is undergoing a radical transformation. Software monitors the engine, plays the music, alerts the driver to oncoming traffic hazards and performs many more functions. However, the old adage that software is slow and chips are fast becomes supremely relevant as vehicles become autonomous. There is no room for error when the car is driving itself, and as we get closer to full autonomy, chips will need to usher in the next generation of innovation.

Q. Why are cutting-edge chips necessary for Edge AI?

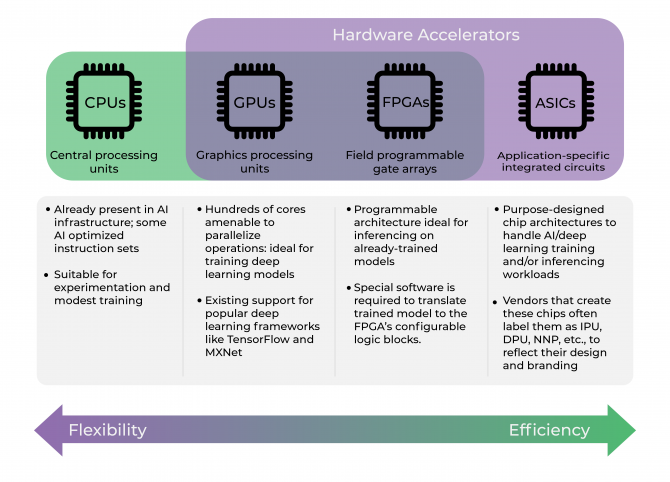

AI requires a specific architecture that is better suited to AI application processing. The trend is to use tensor architectures rather than the linear (scalar) or vector processing typically used in CPUs, DPUs, and GPUs. Cutting-edge chips or dedicated co-processors are becoming mainstream for on-device, edge, and even cloud AI processing. Beyond its architecture, edge AI offers other benefits in terms of locality, privacy, latency, working within power-consumption limits, and mobility support.

Source: Gyrfalcon Technology Inc.
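To make the scalar/vector/tensor distinction concrete, here is a back-of-the-envelope sketch (not Gyrfalcon's design) that counts the multiply-accumulate (MAC) operations in one convolution layer and the idealized cycles needed on units completing 1, 16, or 256 MACs per cycle. The layer shape and unit widths are hypothetical, and the model ignores memory bandwidth and real-world utilization.

```python
# Idealized cycle counts for one convolution layer on three styles of unit.
# Layer shape and unit widths are hypothetical, for illustration only.

def conv_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a k x k convolution producing an h x w x c_out output
    from c_in input channels (stride 1, 'same' padding)."""
    return h * w * c_in * c_out * k * k


def cycles(macs: int, macs_per_cycle: int) -> int:
    """Cycles if the unit is kept 100% busy (ceiling division)."""
    return -(-macs // macs_per_cycle)


# Hypothetical MACs completed per clock cycle by each style of unit.
UNITS = {
    "linear (scalar)": 1,
    "vector (16 lanes)": 16,
    "tensor (16x16 array)": 256,
}

if __name__ == "__main__":
    macs = conv_macs(h=224, w=224, c_in=64, c_out=64, k=3)
    print(f"MACs for one layer: {macs:,}")
    for name, per_cycle in UNITS.items():
        print(f"{name:>22}: {cycles(macs, per_cycle):,} cycles")
```

Under these assumptions the tensor-style array needs 256 times fewer cycles than the scalar unit for the same layer, which is the intuition behind dedicated tensor hardware; actual speedups depend heavily on how fast data can be fed to the array.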

Q. Why is autonomous driving so difficult from a tech perspective?

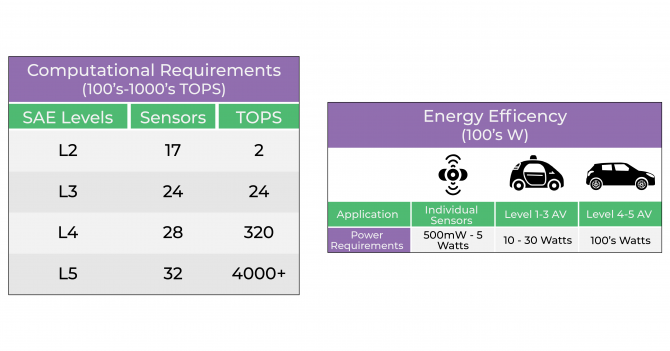

Autonomous vehicles must process massive amounts of data captured by their sensors (cameras, LiDAR, radar, and ultrasonic sensors), and they must provide real-time feedback on traffic conditions, events, weather conditions, road signs, traffic signals, and more. This requires many trillions of operations per second (TOPS) to run several demanding tasks (e.g., object extraction, detection, segmentation, and tracking) simultaneously, and power consumption can be high depending on the workload. Finally, processing speed, reliability, and accuracy are critical and need to exceed human performance.
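A rough way to see where "many trillions of operations per second" comes from is to multiply sensor count, frame rate, and per-frame network cost. The sketch below does exactly that; the sensor counts, frame rates, per-frame costs, and the 50% utilization derating are all hypothetical placeholders, not measured values.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Stream:
    """One group of identical sensors feeding the perception stack."""
    name: str
    count: int             # number of sensors of this type
    fps: float             # frames (or sweeps) per second per sensor
    gops_per_frame: float  # giga-operations to run all tasks on one frame

    def ops_per_second(self) -> float:
        return self.count * self.fps * self.gops_per_frame * 1e9


def required_tops(streams: List[Stream], utilization: float = 0.5) -> float:
    """Peak TOPS needed if the accelerator sustains only `utilization`
    of its peak (a hypothetical derating factor)."""
    total = sum(s.ops_per_second() for s in streams)
    return total / utilization / 1e12


if __name__ == "__main__":
    workload = [
        Stream("camera", count=8, fps=30, gops_per_frame=100),
        Stream("lidar", count=1, fps=10, gops_per_frame=200),
        Stream("radar", count=5, fps=20, gops_per_frame=5),
    ]
    print(f"~{required_tops(workload):.1f} peak TOPS")
```

With these placeholder numbers the workload is 26.5 TOPS sustained, or about 53 TOPS of peak capacity at 50% utilization; adding cameras or heavier networks scales the requirement linearly.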

Q. Describe the computational challenges for autonomous vehicles above L3.

Currently, most autonomous vehicles use a GPU (graphics processing unit) for their core AI processing. A GPU is neither as fast nor as cost-effective as a custom chip (ASIC). Ultimately, we need a dedicated AI processor for autonomous driving. However, one of the biggest issues is power consumption. For systems above L3 to work flawlessly, you need hundreds to thousands of watts to process real-time HD input from multiple cameras, radar, LiDAR, and other sensors. That is an enormous power requirement; it essentially means you need a dedicated battery for processing.

Source: Gyrfalcon Technology Inc.
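The link between compute demand and the power budget is simple division: watts = TOPS / (TOPS per watt). The sketch below applies it and estimates what share of an electric vehicle's energy goes to compute. The 500 TOPS workload, the two efficiency figures, and the 15 kW average traction power are hypothetical placeholders, not figures for any real product.

```python
# Relating compute demand to electrical power and to an EV's energy budget.
# All efficiencies and power figures are hypothetical placeholders.

def compute_power_watts(tops: float, tops_per_watt: float) -> float:
    """Electrical power for an accelerator sustaining `tops` at a given efficiency."""
    return tops / tops_per_watt


def compute_energy_share(compute_w: float, drive_w: float) -> float:
    """Fraction of total energy spent on compute, assuming constant compute
    power `compute_w` and constant average traction power `drive_w`."""
    return compute_w / (compute_w + drive_w)


if __name__ == "__main__":
    need_tops = 500.0    # hypothetical L4 perception workload
    drive_w = 15_000.0   # hypothetical average traction power, in watts
    # Illustrative efficiencies only, not measured figures for any product.
    for label, eff in [("efficiency A (1 TOPS/W)", 1.0),
                       ("efficiency B (10 TOPS/W)", 10.0)]:
        p = compute_power_watts(need_tops, eff)
        share = compute_energy_share(p, drive_w)
        print(f"{label}: {p:,.0f} W, {share:.1%} of energy")
```

With these placeholders, 500 TOPS draws 500 W at 1 TOPS/W but 50 W at 10 TOPS/W, roughly 3.2% versus 0.3% of the vehicle's energy, which is why efficiency (TOPS per watt) matters as much as raw TOPS.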

Q. For autonomous AI, which chips are most widely used: graphics processing units (GPUs), field-programmable gate arrays (FPGAs), application-specific integrated circuits (ASICs), or central processing units (CPUs)?

CPUs are general-purpose processors with a linear architecture. Ideally, CPUs should be reserved for general (but important) non-AI tasks, and overloading them with AI processing should be avoided where possible. GPUs were traditionally used for graphics and gaming; however, thanks to their flexibility and relatively high processing power, they can be used for AI training and edge applications. They have other drawbacks, though: data transfer between the GPU and the CPU often ends up being one of the main constraints of the system, and GPUs are also power-hungry and costly. GPUs are mostly used for cloud AI because of their flexibility and configurability. ASICs are better suited to application-specific tasks that combine heavy computation with low-cost and power-efficiency requirements, such as AI applications. Using a dedicated AI co-processor (ASIC) for AI applications is becoming mainstream, particularly for edge applications. We believe that, in the future, autonomous driving will rely on dedicated processors and systems built for the purpose rather than on GPUs.
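Why can CPU-to-GPU data transfer become a constraint? The sketch below models the time between completed frames when every frame must cross a link before it is processed, with and without overlapping (double-buffering) transfer and compute. The link bandwidth, camera configuration, and compute time are hypothetical, and the model ignores protocol overhead.

```python
# Per-frame period when sensor data must cross a CPU<->accelerator link.
# Link bandwidth, frame size, and compute time are hypothetical.

def transfer_ms(n_bytes: float, link_gb_per_s: float) -> float:
    """Time to move n_bytes over a link of link_gb_per_s gigabytes/second."""
    return n_bytes / (link_gb_per_s * 1e9) * 1e3


def frame_period_ms(n_bytes: float, link_gb_per_s: float,
                    compute_ms: float, pipelined: bool = False) -> float:
    """Time between completed frames. Without pipelining, transfer and
    compute run back to back; with double buffering they overlap, so the
    slower stage sets the pace."""
    t = transfer_ms(n_bytes, link_gb_per_s)
    return max(t, compute_ms) if pipelined else t + compute_ms


if __name__ == "__main__":
    # Hypothetical: eight 1920x1080 RGB cameras at 1 byte per channel.
    frame_bytes = 8 * 1920 * 1080 * 3
    for pipelined in (False, True):
        period = frame_period_ms(frame_bytes, link_gb_per_s=4.0,
                                 compute_ms=20.0, pipelined=pipelined)
        print(f"pipelined={pipelined}: {period:.1f} ms/frame, "
              f"{1000 / period:.1f} frames/s")
```

With these numbers the link adds about 12 ms per frame. Overlapping transfer with compute hides that cost, but only while transfer stays shorter than compute; keeping data on a single dedicated chip avoids the hop altogether.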

Q. Why is Edge computing so important for the future?

Edge computing is important because of the following features: locality, low latency, privacy/security, mobility support, and operation under limited power consumption.
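Low latency matters because a moving car keeps traveling while it waits for a decision. The sketch below converts latency into distance at constant speed; the 10 ms on-device and 100 ms cloud round-trip figures are hypothetical, chosen only to illustrate the arithmetic.

```python
# Distance a vehicle covers while waiting for a decision.
# The latency figures are hypothetical, not measurements.

def meters_traveled(speed_kmh: float, latency_ms: float) -> float:
    """Distance covered at constant speed during latency_ms milliseconds."""
    return speed_kmh / 3.6 * latency_ms / 1000.0


if __name__ == "__main__":
    speed = 100.0  # km/h
    for where, latency in [("on-device (edge)", 10.0),
                           ("cloud round trip", 100.0)]:
        print(f"{where}: {meters_traveled(speed, latency):.2f} m "
              f"at {speed:.0f} km/h")
```

At 100 km/h, every 100 ms of extra latency is almost 2.8 m of travel before the car can react, compared with under 0.3 m for a 10 ms local decision.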

Q. If autonomous AI makes a mistake, lives could be at risk. How can manufacturers ensure these mistakes aren’t made?

If you look back at accidents or issues involving autonomous driving, every single case was a novel situation in which the machine-learning system either did not know how to react or reacted wrongly. It takes time, but we must do it correctly. Over 90% of car accidents are due to human error, and although human mistakes can be forgiven, machine errors are not. AI accuracy can be increased through learning capabilities and better algorithms. Besides algorithms, infrastructure and government regulation are important to make autonomous driving possible. Scenarios above L3 (L3+, L4, and L5) are currently being evaluated by several top-tier companies under highway road conditions.

Q. What is the role of sensors (i.e., cameras, LiDAR, ultrasonic, and radar)?

Cameras are typically used for vision processing, that is, building awareness of the surrounding environment for object detection, identification, segmentation, (lane) tracking, blind-spot monitoring, parking assist, traffic sign recognition, and color information. Cameras typically do not provide distance information. LiDAR is mostly used for 360-degree point detection with high accuracy and resolution. It can be used for traffic-jam assist, AEB (automatic emergency braking), highway pilot, and more. LiDAR is very expensive and offers no color information. Radar is used for object detection at resolutions ranging from high to low and is useful at long range, but it cannot distinguish what the objects are. Ultrasonic sensors are often used for parking assist, blind-spot detection, and ACC (adaptive cruise control) with stop-and-go. They have low resolution but are weather-resistant, and are useful for cruise control, collision avoidance, and distance sensing.
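The strengths and gaps described above explain why vehicles combine sensors. The sketch below encodes a simplified version of those capabilities (the capability labels are hypothetical shorthand, not an industry taxonomy) and reports which needs a given sensor suite leaves uncovered.

```python
from typing import Dict, FrozenSet, Iterable, Set

# Simplified capabilities per sensor, summarized from the answer above.
# The labels are hypothetical shorthand chosen for this illustration.
CAPABILITIES: Dict[str, FrozenSet[str]] = {
    "camera": frozenset({"classify", "color", "lanes_signs"}),
    "lidar": frozenset({"distance", "high_res_3d"}),
    "radar": frozenset({"distance", "long_range"}),
    "ultrasonic": frozenset({"distance", "short_range", "weather_resistant"}),
}


def missing(needs: Iterable[str], suite: Iterable[str]) -> Set[str]:
    """Return the needs that no sensor in `suite` provides."""
    covered: Set[str] = set().union(*(CAPABILITIES[s] for s in suite))
    return set(needs) - covered


if __name__ == "__main__":
    needs = {"classify", "color", "distance"}
    for suite in (["camera"], ["lidar", "radar"], ["camera", "radar"]):
        print(suite, "->", sorted(missing(needs, suite)) or "all covered")
```

A camera alone lacks distance and LiDAR plus radar lack classification and color, while camera plus radar covers all three needs in this toy model, which is the basic argument for sensor fusion.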

Q. For self-driving cars, chips are essential in enabling their “brain” and “eyes” to work. How can AI empower these sensors?

They do it in four stages. First is sensing: the surrounding environment is captured with interior and exterior cameras, LiDAR, radar, and ultrasonic sensors. Second is perceiving, through AI compute processing, algorithms, training, inferencing, and data/sensor fusion, which are used for data structuring, segmentation, object detection, and video understanding. Third is planning, with context awareness, path planning, and task prioritization. Last is actuation/control: steering, braking, acceleration, and engine and transmission control.
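The four stages can be pictured as one control step that runs repeatedly. The following is a deliberately toy sketch: every function, the `Command` type, the 30 m safe gap, and the throttle/brake values are hypothetical stubs standing in for real perception networks and planners.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Command:
    """Hypothetical actuator command."""
    steer_deg: float
    throttle: float  # 0.0 to 1.0
    brake: float     # 0.0 to 1.0


def sense(raw_ranges: List[float]) -> List[float]:
    """Stage 1, sensing: capture readings (stubbed as obstacle ranges in m)."""
    return list(raw_ranges)


def perceive(ranges: List[float]) -> float:
    """Stage 2, perceiving: stands in for AI inference and sensor fusion;
    here it just reports the nearest obstacle distance."""
    return min(ranges, default=float("inf"))


def plan(nearest_m: float, safe_gap_m: float = 30.0) -> str:
    """Stage 3, planning: a toy rule choosing between two behaviors."""
    return "brake" if nearest_m < safe_gap_m else "cruise"


def actuate(decision: str) -> Command:
    """Stage 4, actuation/control: map the plan to steering/throttle/brake."""
    if decision == "brake":
        return Command(steer_deg=0.0, throttle=0.0, brake=0.8)
    return Command(steer_deg=0.0, throttle=0.3, brake=0.0)


def control_step(raw_ranges: List[float]) -> Command:
    """One pass through sense -> perceive -> plan -> actuate."""
    return actuate(plan(perceive(sense(raw_ranges))))


if __name__ == "__main__":
    print(control_step([50.0, 12.0]))  # nearest obstacle at 12 m
    print(control_step([80.0]))        # clear road ahead
```

In a real vehicle this loop runs many times per second, and the perceive stage is where most of the AI compute discussed in this interview is spent.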

Q. What can we expect in the next 3 years from AI-empowered sensors?

Image sensors cover a broad range of applications, from smartphones to machine vision and automotive. The CMOS image sensor (CIS) market will continue its disruptive innovation and growth in both sensing and AI. In addition to basic functionality for autonomous vehicles, OEMs are moving to include deep learning algorithms for object recognition and segmentation, which are often used in ADAS. Large companies and startups continue to offer L3+ services, including robotaxis and intelligent public transportation. These emerging applications and systems have rapidly surrounded themselves with rich, diversified ecosystems, particularly in sensors and computing. Image signal processors (ISPs) for high-end AI applications will require a sophisticated vision processor along with an accelerator. The hardware can be either a standalone system-on-chip (SoC) or a combined SoC+AI processor, pushing up the volume and revenue of ICs. Beyond automotive, AI-powered cameras have applications in smart cities, smart homes/retail, industrial IoT, smart traffic and resource management, and security, including surveillance, detection, and recognition. These applications imply the development of powerful ISPs and vision processors that can handle today’s analytic algorithms and tomorrow’s AI with deep learning capabilities.

——————————————

Bin Lei is co-founder and senior vice-president at Gyrfalcon Technology Inc. (GTI), a leading developer of high-performance, low-power AI accelerators packaged in low-cost, small chips. https://www.gyrfalcontech.ai