IBM unveils its Telum Processor

EP&T Magazine

Chip delivers a centralized design allowing clients to leverage the full power of the AI processor for AI-specific workloads

At the annual Hot Chips conference, IBM unveiled details of the upcoming IBM Telum Processor, designed to bring deep learning inference to enterprise workloads to help address fraud in real time. Telum is IBM’s first processor that contains on-chip acceleration for AI inferencing while a transaction is taking place. Three years in development, the new on-chip hardware acceleration is designed to help customers achieve business insights at scale across banking, finance, trading and insurance applications, as well as customer interactions. A Telum-based system is planned for the first half of 2022.

Businesses typically apply detection techniques to catch fraud after it occurs, a process that can be time-consuming and compute-intensive due to the limitations of today’s technology, particularly when fraud analysis and detection are conducted far away from mission-critical transactions and data. Due to latency requirements, complex fraud detection often cannot be completed in real time, meaning a bad actor could have already successfully purchased goods with a stolen credit card before the retailer is aware fraud has taken place.

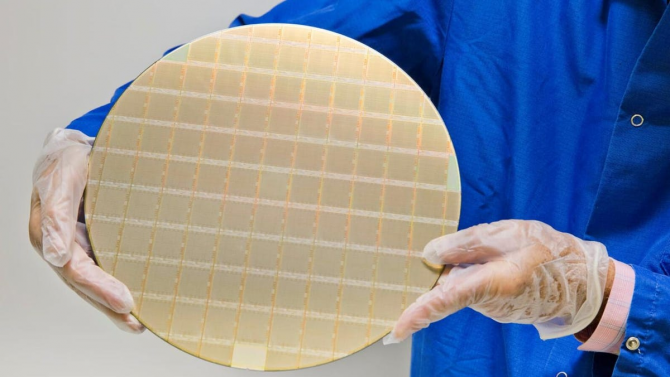

Source: IBM

According to the Federal Trade Commission’s 2020 Consumer Sentinel Network Databook, consumers reported losing more than $3.3 billion to fraud in 2020, up from $1.8 billion in 2019.

The Telum chip features a centralized design, which allows clients to leverage the full power of the AI processor for AI-specific workloads, making it well suited to financial services workloads such as fraud detection, loan processing, clearing and settlement of trades, anti-money laundering and risk analysis.

Telum contains 8 processor cores with a deep super-scalar out-of-order instruction pipeline, running at a clock frequency of more than 5 GHz, optimized for the demands of heterogeneous enterprise-class workloads. The cache and chip-interconnection infrastructure provides 32 MB of cache per core, and can scale to 32 Telum chips.
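To put those figures in perspective, the short sketch below multiplies them out. It uses only the numbers quoted above (8 cores per chip, 32 MB of cache per core, up to 32 chips). The function name `aggregate_totals` and the constant names are illustrative, not IBM terminology, and the totals are simple products that assume every chip is fully populated and ignore any other cache levels or how cache is shared between cores.

```python
# Figures quoted in the article; everything else here is illustrative.
CORES_PER_CHIP = 8        # processor cores per Telum chip
CACHE_PER_CORE_MB = 32    # cache per core, in MB
MAX_CHIPS = 32            # maximum number of chips in one system


def aggregate_totals(chips: int = MAX_CHIPS) -> dict:
    """Multiply out the per-core figures for a system with `chips` chips."""
    if not 1 <= chips <= MAX_CHIPS:
        raise ValueError(f"chips must be between 1 and {MAX_CHIPS}")
    cores = chips * CORES_PER_CHIP
    return {
        "cores": cores,
        "cache_per_chip_mb": CORES_PER_CHIP * CACHE_PER_CORE_MB,
        "cache_total_mb": cores * CACHE_PER_CORE_MB,
    }


if __name__ == "__main__":
    totals = aggregate_totals()
    print(f"Cores in a {MAX_CHIPS}-chip system: {totals['cores']}")
    print(f"Per-core cache summed over one chip: {totals['cache_per_chip_mb']} MB")
    print(f"Per-core cache summed over the system: {totals['cache_total_mb']} MB")
```

On these assumptions, a fully scaled 32-chip system would have 256 cores, with 256 MB of per-core cache on each chip.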

IBM Research also recently announced scaling to the 2 nm node. In Albany, NY, home to the IBM AI Hardware Center and Albany Nanotech Complex, IBM Research has helped to create a collaborative ecosystem with public-private industry players to fuel advances in semiconductor research, helping to address global manufacturing demands and accelerate the growth of the chip industry.