Smart speech/voice-based tech market growing

EP&T Magazine

IDTechEx Research forecasts that it will reach $15.5 billion by 2029

Natural human-machine interfaces are shaping our lives. From punch cards to keyboards, and from the mouse to touch screens, technologies have shaped the way humans interact with machines. The “human-machine interface” (HMI) began as the “computer interface”, because early computers were not interactive. Gradually, “human-machine interface” became “human-machine interaction”. “Interaction” was the first revolution in the development of the human-machine interface. We are now experiencing the transition to the “natural user interface”, which is considered the second revolution of HMI: machines and computers can interpret natural human communication, and they communicate more like humans.

Compared with keyboards and mice, touch is considered a natural form of interaction. Apart from touch, audio and vision modalities can also provide new ways of interacting.

Figure 1 Evolution of human-machine interactions

Speech/Voice-based interaction

Speech enables convenient interaction. It is hands-free, eyes-free and keyboard-free. Because talking is natural for most of us and does not require learning new skills, the learning curve is low. Humans can speak 150 words per minute on average, compared with 40 when typing. Speech interaction can be mastered quickly by young people, the elderly, people with disabilities and people who cannot read or write. It can also be used in situations and devices where common interactions are challenging, such as while driving, in the dark, or on extremely small wearables. These advantages make speech an increasingly popular medium for devices and applications.

Speech recognition (SR) is the “ear” of a machine. It is the basis of the speech user interface, because the input enables the whole interaction process. Speech recognition was first introduced in the 1920s, when a toy dog, “Radio Rex”, would come when his name was called. Speech user interfaces were also applied in vehicles early on. However, poor recognition accuracy and bad user experiences stopped the technology from going further. From 1993, the accuracy of speech recognition based on traditional models stagnated at around 70%, which led to poor user experiences, as users could easily get frustrated and lose patience during the process. It was machine learning, or more specifically deep learning, that significantly increased the accuracy of speech recognition in the 2010s. In 2016, Microsoft reported that a speech recognition system had reached human parity with a word error rate of 5.9%, and in 2017 Google reported an accuracy of 95%. These improvements indicate that machines can be as good as human beings at “hearing”, and speech recognition has now become a commodity.
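For readers unfamiliar with the metric, word error rate (WER) is commonly computed as the number of word substitutions, deletions and insertions needed to turn the recognizer's output into the reference transcript, divided by the number of words in the reference. The following is a minimal sketch of that standard definition, not the evaluation code used by any of the companies mentioned. The function name and the sample sentences are illustrative assumptions.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length.

    Illustrative helper (hypothetical name); tokenizes on whitespace only.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion (reference word missing)
                curr[j - 1] + 1,     # insertion (extra hypothesis word)
                prev[j - 1] + cost,  # substitution or exact match
            ))
        prev = curr
    return prev[-1] / len(ref)


# Made-up example: one deleted word and one substituted word
# against a six-word reference.
wer = word_error_rate(
    "turn on the kitchen lights please",
    "turn on kitchen light please",
)
print(f"WER = {wer:.1%}")
```

On this scale, a word error rate of 5.9% corresponds to roughly six word errors per 100 reference words. If accuracy is read as one minus the word error rate (an assumption, since the article does not define it), 95% accuracy would correspond to a word error rate of about 5%.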

Giants such as Apple, Amazon, IBM, Google and Microsoft are all working on smart speech. Besides the “ear”, it is also vital for machines to have a “brain”, a “mouth” and other organs in order to realize natural-language speech interaction. In the process, new technologies and business models are emerging.

Speech is language-dependent, which makes the global market more complicated and segmented. However, the global market focuses mainly on English-centric interactions, with systems for a few other popular languages developed by local players thanks to their strengths in language data.

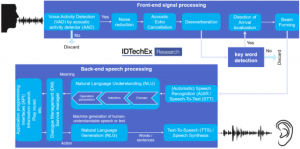

Figure 2 Spoken dialogue system process

The report from IDTechEx Research introduces the different technologies from scratch, from both a hardware and a software point of view. They are as follows:

- Voice-enabled smart speakers

- Microphone arrays

- MEMS speakers

- Voice system on Chip

- Machine learning

- Front-end signal processing

- Keyword spotting

- Automatic speech recognition

- Natural language understanding

- Speech synthesis

- Voice recognition

- Machine translation

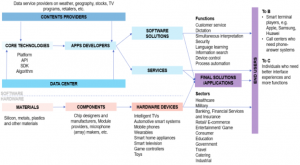

Figure 3 Value chain

The report analyzes the market landscape, business models and value chains, and gives a ten-year market forecast by revenue model and by application for the following sectors:

- Automotive

- Banking, financial services and insurance

- Healthcare

- Travel, hotels

- Retail/commerce

- Home automation

- Education

- Games & entertainment

- Voice-enabled smart speakers