AdHawk Microsystems secures funding led by Intel Capital

Stephen Law

Funding will bring research-grade eye tracking to consumer VR/AR headsets

Kitchener ON-based AdHawk Microsystems has raised US$4.6-million in funding as it strives to bring the world’s first camera-free eye tracking system to market – a major advance that paves the way for a new generation of virtual reality/augmented reality devices capable of more immersive experiences in gaming, healthcare, training and beyond.

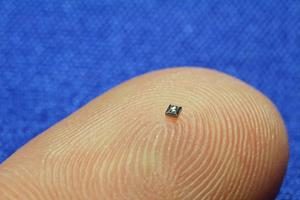

FIG. 1: This photo shows the size of the technology for eye tracking developed by AdHawk Microsystems.

Intel Capital led the Series A investment round, with participation from Brightspark Ventures and the founders of AdHawk, which develops advanced microsystems for human-computer interaction.

Current VR/AR headsets equipped with eye tracking systems rely on cameras to keep track of where the user is looking, and it takes immense computing power to process the hundreds of images per second the cameras capture. As a result, these headsets need to be tethered to a power supply and a high-end computer.

Ultra-compact micro-electromechanical systems

AdHawk’s eye tracker replaces the cameras with ultra-compact micro-electromechanical systems – known as MEMS – that are so small they can’t be seen by the naked eye. These MEMS eliminate power-hungry image processing altogether, resulting in order-of-magnitude improvements in the speed, form factor and energy efficiency of the VR/AR units that carry them, while delivering resolution on par with expensive, research-grade systems.

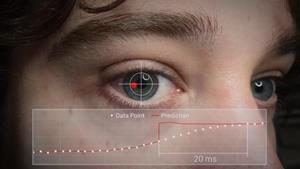

The AdHawk system can accurately predict where the user will look next, up to 50 milliseconds (50 one-thousandths of a second) in advance. This capability will enable designers to enhance the element of surprise for gamers, help optimize the placement of ads in VR/AR media, render portions of scenes in advance and provide negative latency to make human-computer interaction seamless.

AdHawk CEO and co-founder Dr. Neil Sarkar, whose microsystems research at the University of Waterloo won awards and broke new ground, said his company’s tiny eye tracker has the potential to set a new standard for VR/AR user experience.

FIG. 2: This graphic shows the potential of the eye tracking technology developed by AdHawk Microsystems to predict eye movements.

“Creating a sense of total immersion, through an untethered, responsive and unobtrusive headset, is the ultimate goal of the VR/AR community,” said Dr. Sarkar. “We believe our technology will go a long way to enabling headset makers to deliver that experience to their users.”

Eye tracking is a fundamental enabling technology

Recent mergers and acquisitions in the eye tracking space led by Apple, Google, and Facebook came as no surprise to the VR/AR community, as headset manufacturers have long known that eye tracking is a fundamental enabling technology. Eye tracking promises to reduce processing overhead by rendering only the highest acuity region in the eye’s field of view in full resolution, while reducing resolution (and increasing contrast) in the periphery. This approach, known as foveated rendering, is expected to greatly reduce power consumption and latency to make today’s tethered VR experiences obsolete and to mitigate discomfort. Menu navigation, creating virtual eye contact, and targeting while gaming are just a few other examples of eye tracking applications.
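For readers unfamiliar with foveated rendering, the idea can be sketched in a few lines of Python. This is only an illustration of the general technique, not AdHawk's or any headset maker's implementation. The function name `resolution_scale`, the 5-degree and 30-degree thresholds, the 0.25 minimum scale and the fixed pixels-per-degree figure are all assumptions chosen for the example.

```python
import math


def resolution_scale(pixel_xy, gaze_xy, pixels_per_degree=20.0,
                     fovea_deg=5.0, periphery_deg=30.0, min_scale=0.25):
    """Return a render-resolution scale (1.0 = full) for one screen point.

    All default values are illustrative assumptions, not measured figures.
    """
    # Angular distance from the gaze point, assuming a constant
    # pixels-per-degree mapping (a simplification of real headset optics).
    dx = pixel_xy[0] - gaze_xy[0]
    dy = pixel_xy[1] - gaze_xy[1]
    eccentricity_deg = math.hypot(dx, dy) / pixels_per_degree

    if eccentricity_deg <= fovea_deg:
        return 1.0          # high-acuity region: full resolution
    if eccentricity_deg >= periphery_deg:
        return min_scale    # far periphery: lowest resolution

    # Blend linearly between full and minimum resolution in between.
    t = (eccentricity_deg - fovea_deg) / (periphery_deg - fovea_deg)
    return 1.0 + t * (min_scale - 1.0)


if __name__ == "__main__":
    gaze = (960, 540)  # hypothetical gaze point reported by an eye tracker
    for point in [(960, 540), (1310, 540), (1900, 540)]:
        print(point, round(resolution_scale(point, gaze), 3))
```

A renderer would evaluate something like this per tile rather than per pixel and would update the gaze point every frame, which is why the speed and latency of the eye tracker matter.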

Replacing cameras with AdHawk’s tiny, low-power devices – which can operate for a full day on a coin-cell battery – will enable headset manufacturers to provide eye tracking without the need for tethering.

AdHawk’s device is currently available for purchase as an eye tracking evaluation kit. The company has already gleaned worthwhile information from user testing, said Dr. Sarkar. “We have discovered that when we take thousands of eye position measurements per second to capture the dynamics of eye movements within saccades [the eye’s rapid, abrupt movements between fixation points], we get valuable insight into the state of the user – are they tired, interested, confused, anxious? Where exactly will they look next? This information can be fed back into the VR/AR experience to greatly enhance immersion.”

AdHawk is developing additional sensor technologies to create new experiences for its users and is working on capturing and analyzing data from multiple sensors to derive greater insight. Upcoming products include a low-power, ultra-precise gesture sensor and a point cloud scanner module for super-resolution 3D sensing.